I copied that table CSV from GIT and filtered it to generate plots of frequency versus "CORE_CEFF" for a couple of different "VRATIO" values.

This link declares:

https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.54.905&rep=rep1&type=pdf

$\text{Power} \approx V_{DD}^2 \times F_{clk} \times C_{eff}$, where the effective switched capacitance, $C_{eff}$, is commonly expressed as the product of the physical capacitance $C_L$, and the activity weighting factor α, each averaged over the N nodes.

I don't know how you can identify what "CORE_CEFF" is in your processor, but the equation shows how that correlates to power. I.e. smaller Ceff equals lower power.
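To make the scaling concrete, here is a minimal Python sketch of the quoted relation. The function name `dynamic_power` and the normalized inputs are my own for illustration; they are not taken from the table or the paper, and the result only shows the proportionality, not actual watts.

```python
def dynamic_power(vdd, f_clk, c_eff):
    """Relative dynamic power from the quoted relation: VDD^2 * Fclk * Ceff.

    All inputs are treated as normalized, unitless values (an assumption),
    so only ratios between two results are meaningful.
    """
    return vdd ** 2 * f_clk * c_eff


# Same voltage and clock, but half the effective switched capacitance.
full = dynamic_power(vdd=1.0, f_clk=1.0, c_eff=1.0)
half = dynamic_power(vdd=1.0, f_clk=1.0, c_eff=0.5)
print(half / full)  # 0.5: halving Ceff halves the power at fixed VDD and Fclk
```

Read the other way round, a workload with a smaller Ceff leaves headroom under a fixed power budget, which would be consistent with the table allowing a higher frequency there.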

Then the plot looks meaningful since the lowest frequency is at the highest CORE_CEFF and the frequency climbs as CORE_CEFF gets smaller, up to some limit.

Since the largest value of CORE_CEFF in the table is 1.0, that would be the highest power condition presumably associated with the 160W power rating of the table/processor.

I could not figure out how to post an image of the graphs, nor will the forum let me post the XLSX file with base data plus graphing tab, since it is too big. So I deleted a bunch of rows from the base data that had "NEST_CEFF" > 0.25. This let me shrink the XLSX file enough to post it.

The first tab is the CSV data as posted. The second tab "Plotme" is a filter + graph that can be manipulated by the red-colored cells; one variable showing a big difference is the VRATIO which can be modified by adjusting the VRATIO_INDEX box in integer values from 0 to 23 (the table has entries for all of those). The other 2 tabs are copies of the Plotme tab with just the values & graphs at VRATIO=1.0 and VRATIO=0.7498; this let me save and review them side-by-side. You could probably get fancy and plot all the variations on a single graph but I didn't care to go that far.
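If you would rather script it than use the spreadsheet, the same filter can be sketched in Python with only the standard library. This is a rough sketch under assumptions: I am guessing that the CSV has a `VRATIO_INDEX` column and I made up the frequency column name `FREQ_MHZ`, so adjust both to the real headers. The sample rows are placeholders, not values from the actual table.

```python
import csv
import io

# Placeholder rows for illustration only; NOT values from the real table.
# VRATIO_INDEX and FREQ_MHZ are assumed column names.
SAMPLE_CSV = """VRATIO_INDEX,CORE_CEFF,NEST_CEFF,FREQ_MHZ
0,1.0,0.25,2500
0,0.5,0.25,3000
0,0.5,0.5,2900
23,1.0,0.25,3400
23,0.5,0.25,3800
"""


def select_curve(csv_text, vratio_index, max_nest_ceff=0.25,
                 freq_column="FREQ_MHZ"):
    """Return (CORE_CEFF, frequency) pairs for one VRATIO index.

    Rows with NEST_CEFF above max_nest_ceff are dropped, mirroring the
    rows I deleted to shrink the XLSX file.
    """
    points = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        if int(row["VRATIO_INDEX"]) != vratio_index:
            continue
        if float(row["NEST_CEFF"]) > max_nest_ceff:
            continue
        points.append((float(row["CORE_CEFF"]), float(row[freq_column])))
    return sorted(points)  # sorted by CORE_CEFF, ready for plotting


for core_ceff, freq in select_curve(SAMPLE_CSV, vratio_index=0):
    print(core_ceff, freq)
```

To use it on the real data, read the file contents into a string and pass that in place of `SAMPLE_CSV`; the resulting pairs can go straight into whatever plotting tool you like.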

I picked VRATIO=1.0 and VRATIO=0.7948 because the maximum frequency changes substantially between those two values, starting at 3.4GHz and climbing to 3.8GHz. You can play with the VRATIO_INDEX in the Plotme tab and see how the frequency curve continues to increase at different CORE_CEFF values, though it is always capped at 3.8GHz.

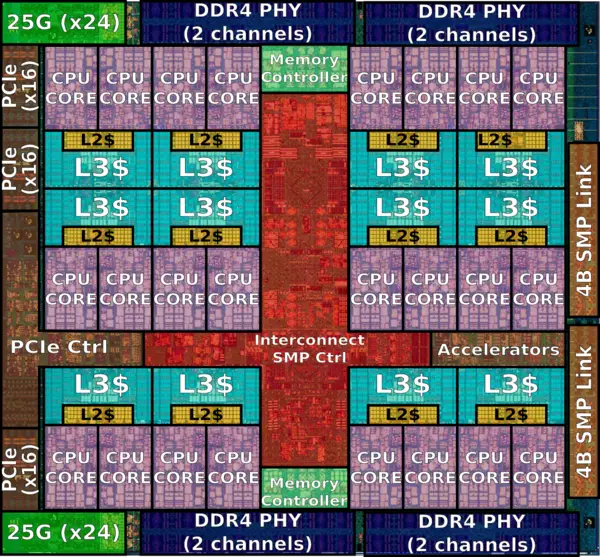

Raptor quotes the 190W 18-Core CPU as: 2.8GHz - 3.8GHz, so presumably you now have a 160W 16-Core CPU of 2.5GHz - 3.8GHz.

https://raptorcs.com/content/CP9M36/intro.html CP9M36

IBM POWER9 v2 CPU (18-Core)

18 cores per package

2.8GHz base / 3.8GHz turbo (WoF)

190W TDP

User @deepblue was running an 18-Core CPU on a Blackbird mainboard, though with extra cooling:

https://forums.raptorcs.com/index.php/topic,99.0.html

Hopefully you will find out if, long term, the Blackbird can handle a 16-core P9 when it matches the TDP of the supported 8-core version.

Nice work! Please report back in a few months....